The Sound of the Seasons: Visualizing Music Through AI

Skills: Art Direction, Data Processing & Analysis, Graphic & Information Design

Tools: Adobe Illustrator, Figma, Generative AI, Google Sheets, Microsoft Excel, Procreate, Spotify

Project Overview & Process

AI Tools Used

Playlist Creation

Challenges with AI

Data Process

Design Journey

Sketches

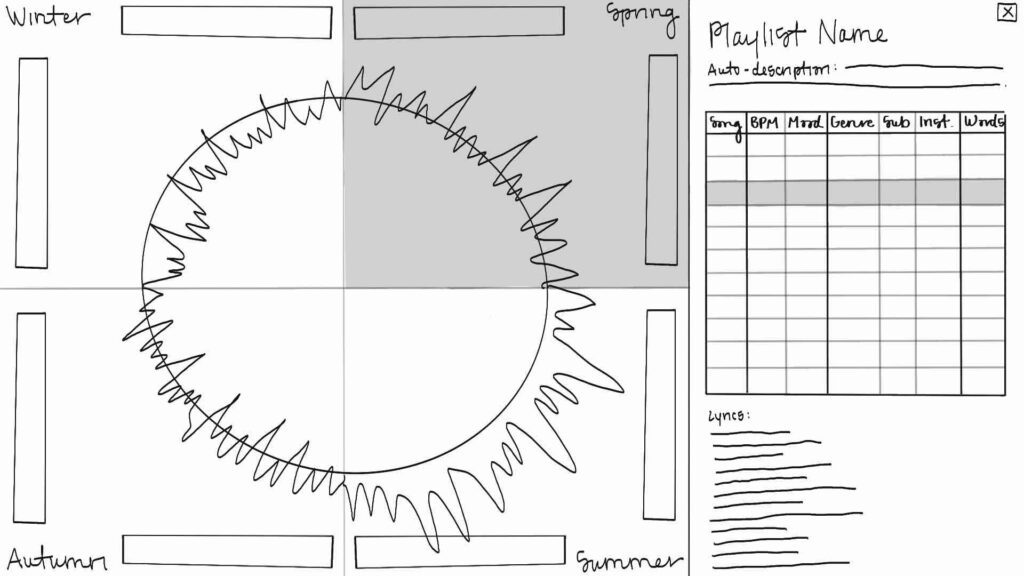

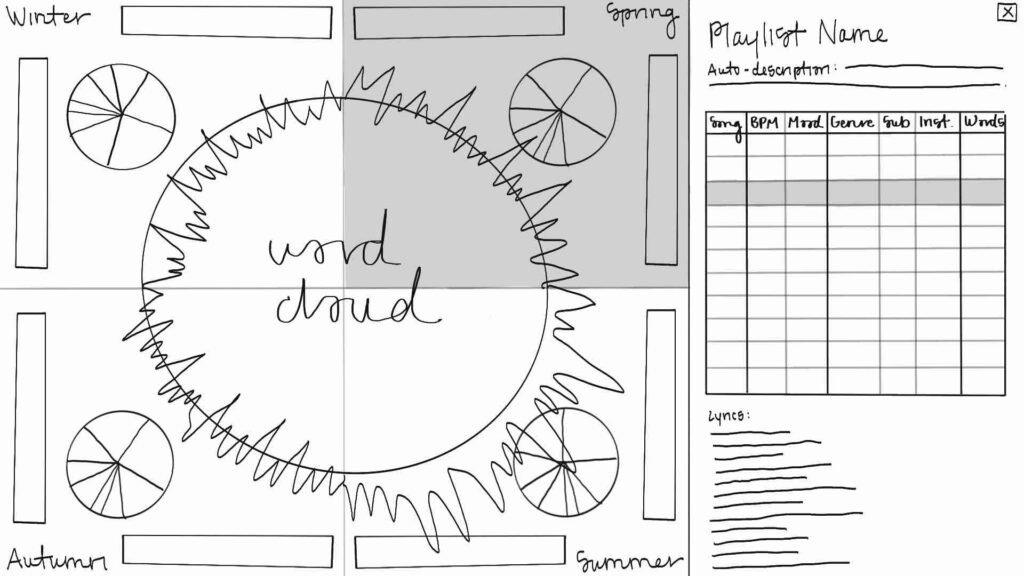

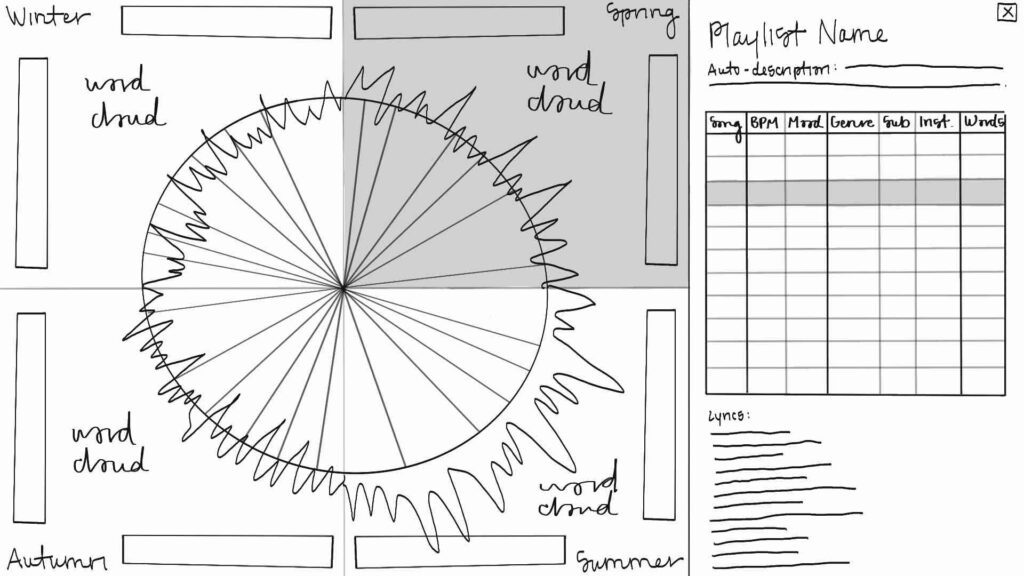

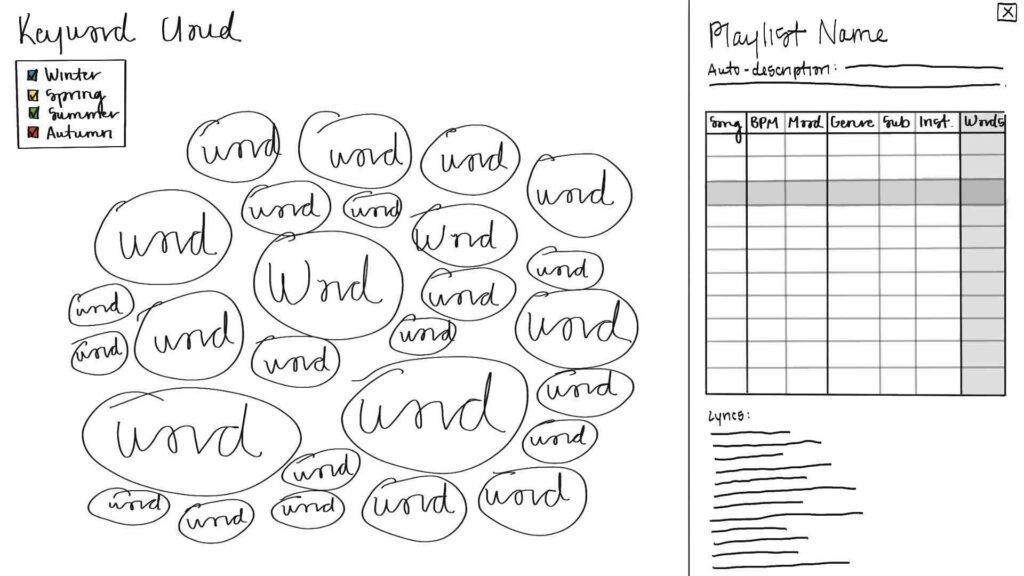

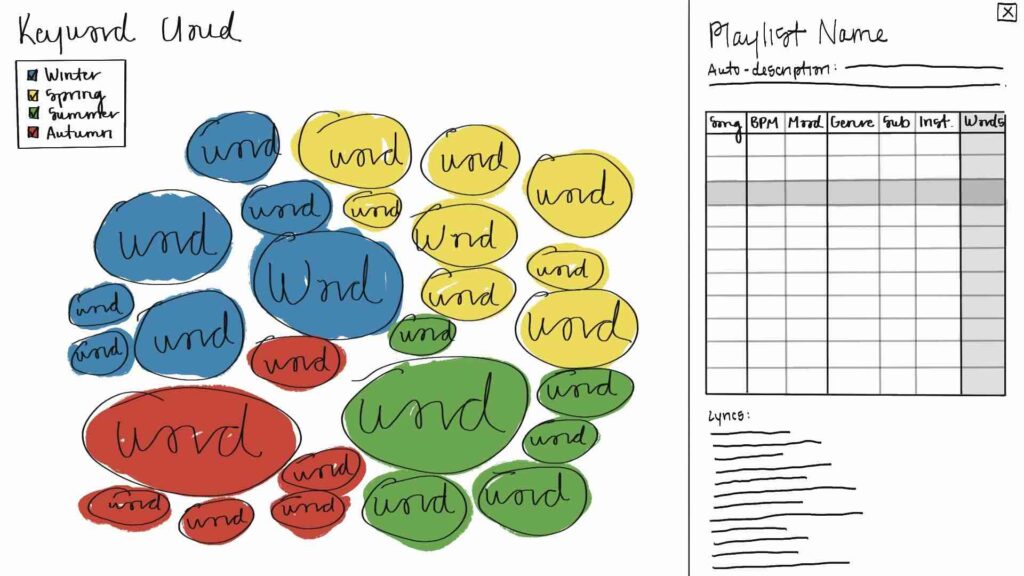

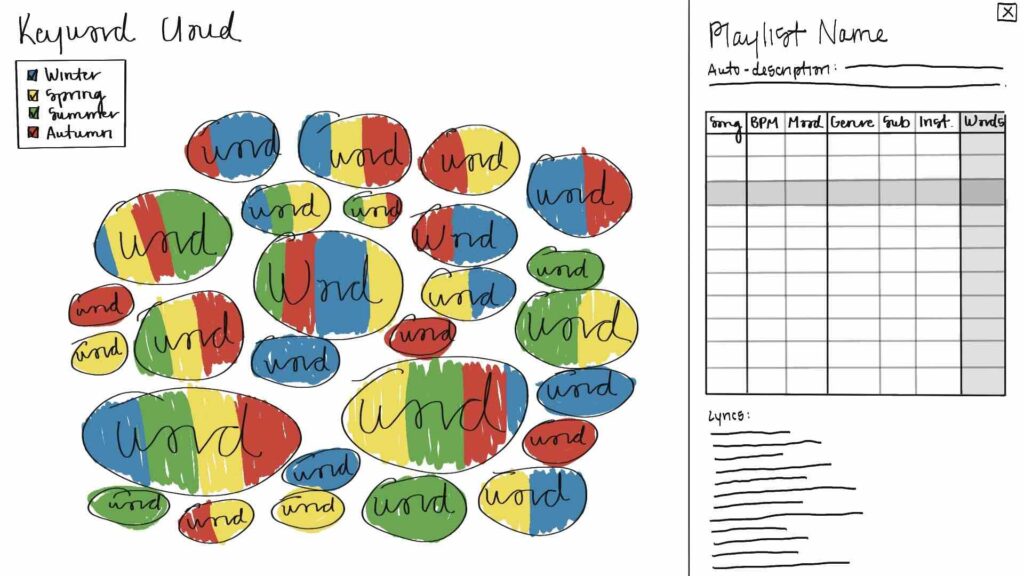

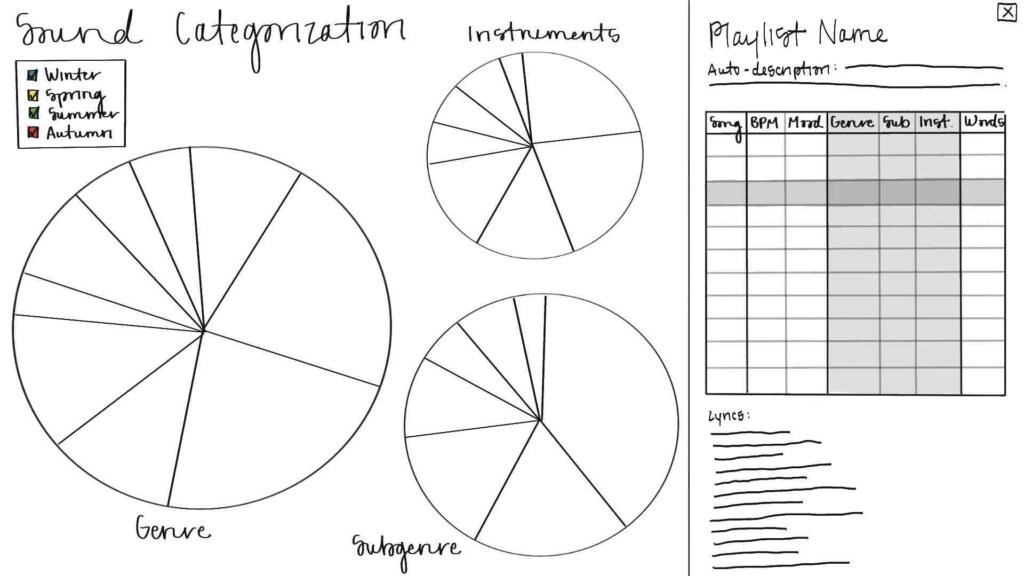

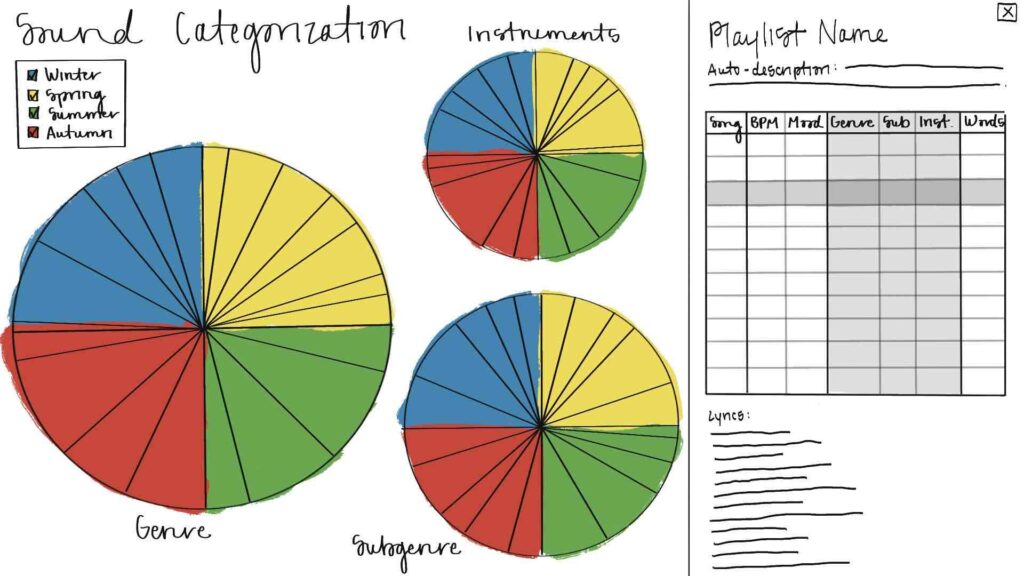

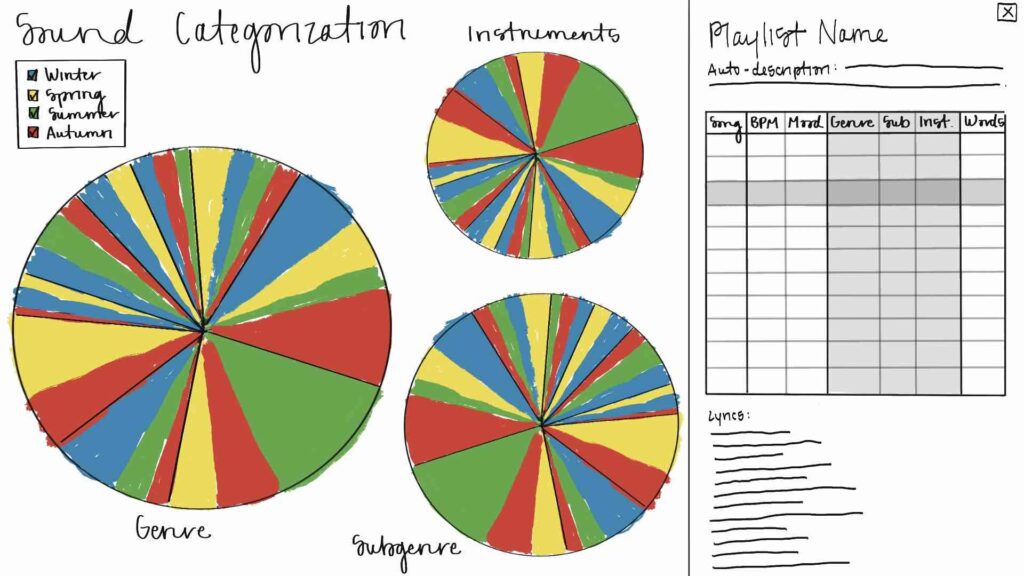

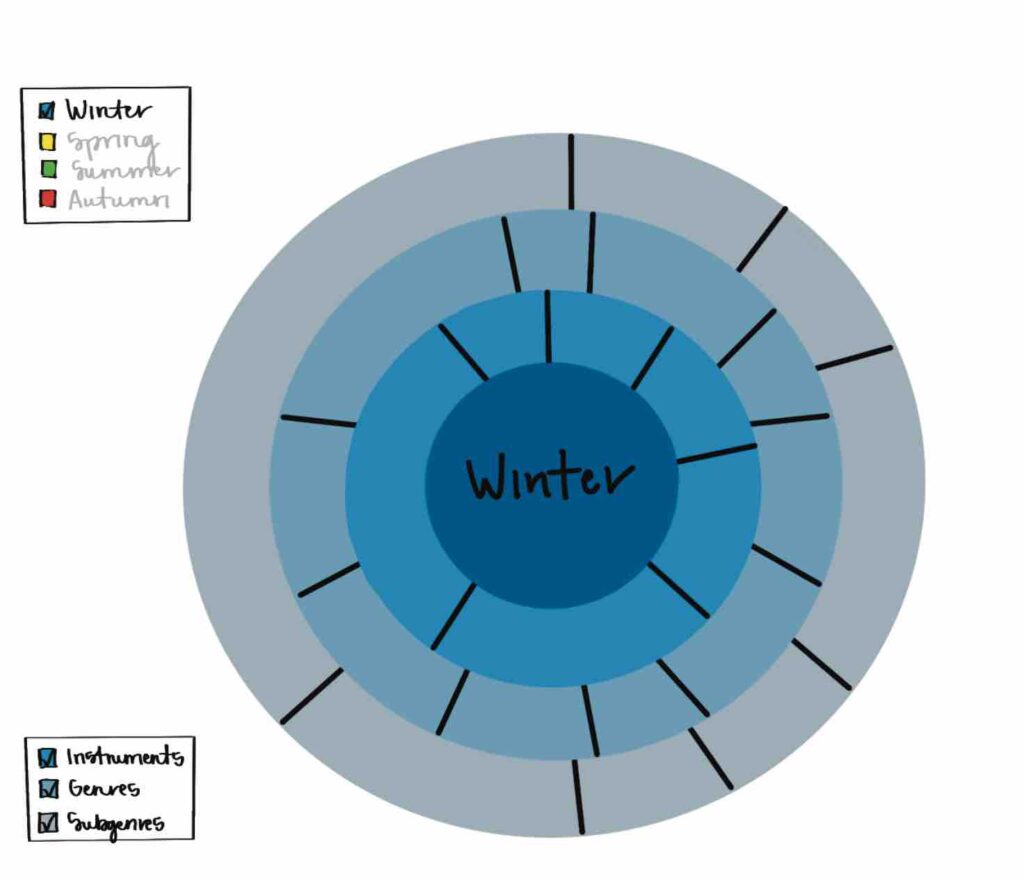

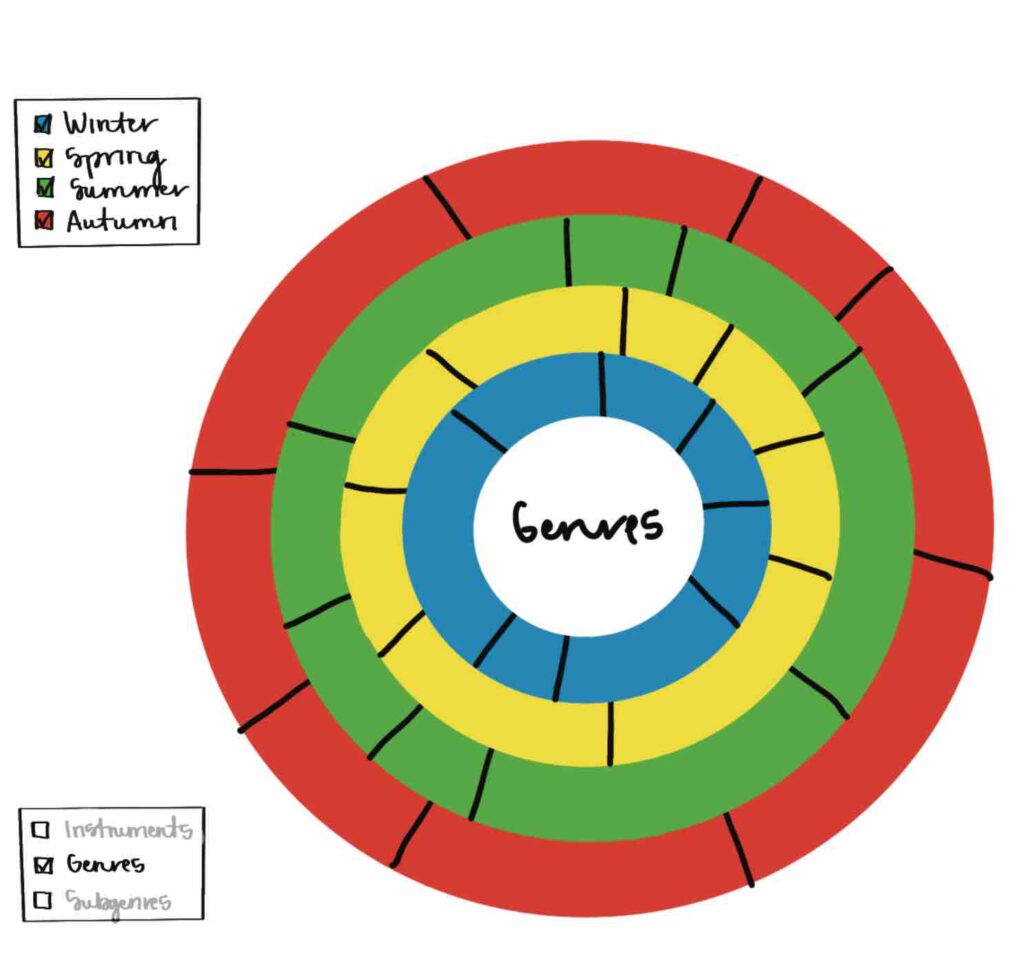

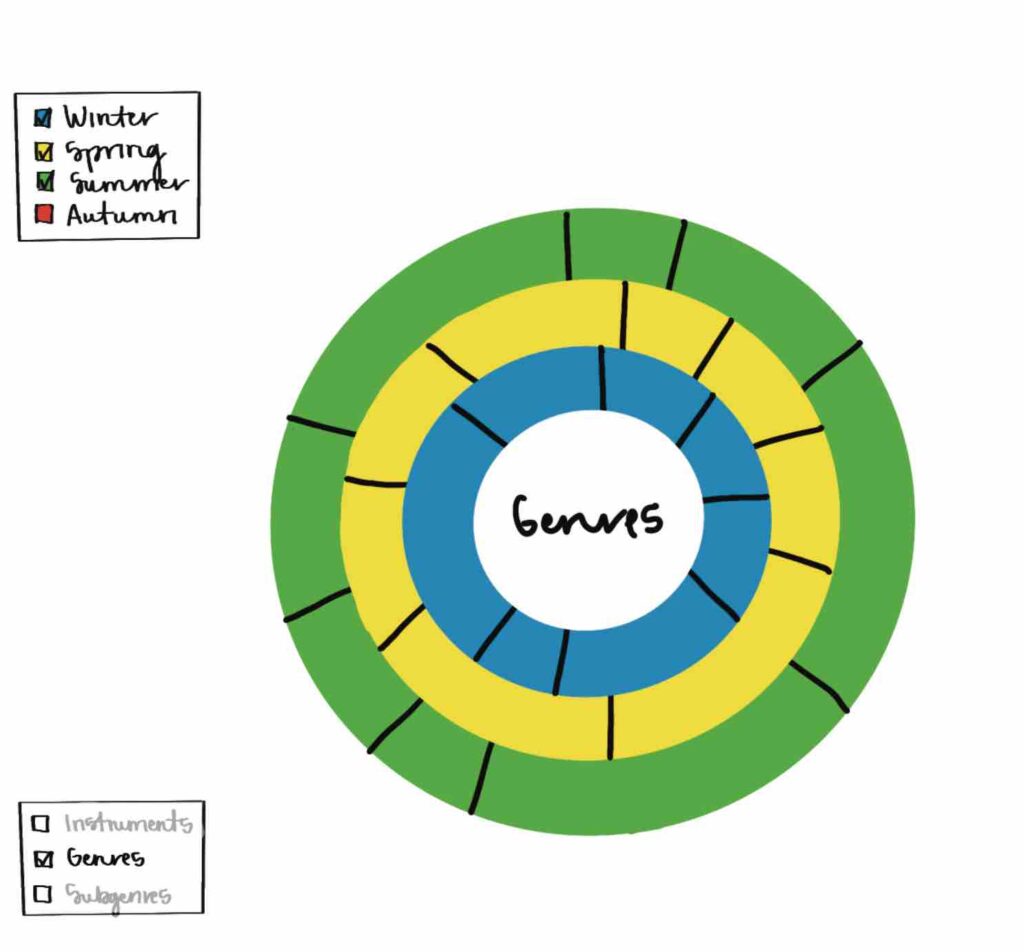

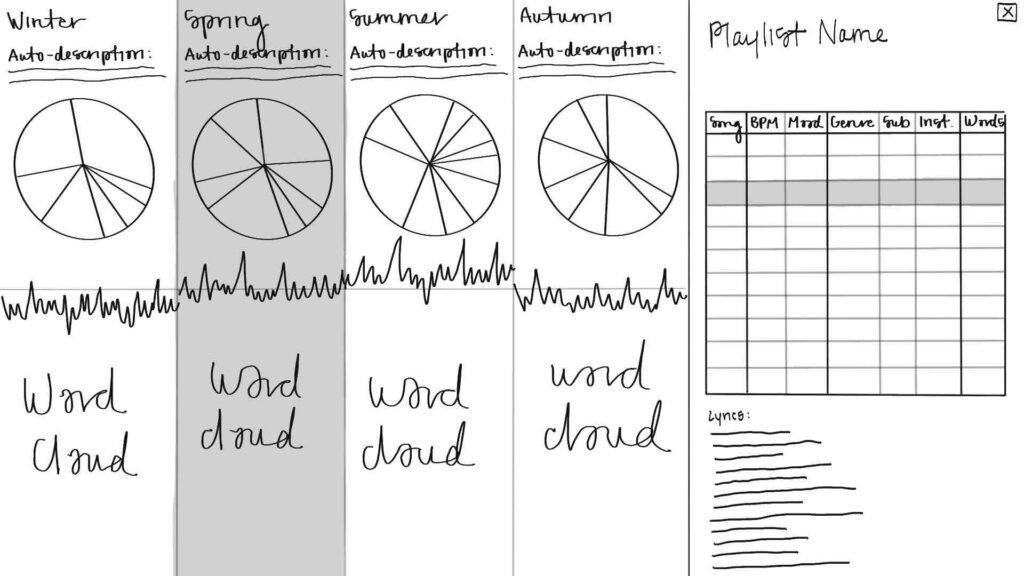

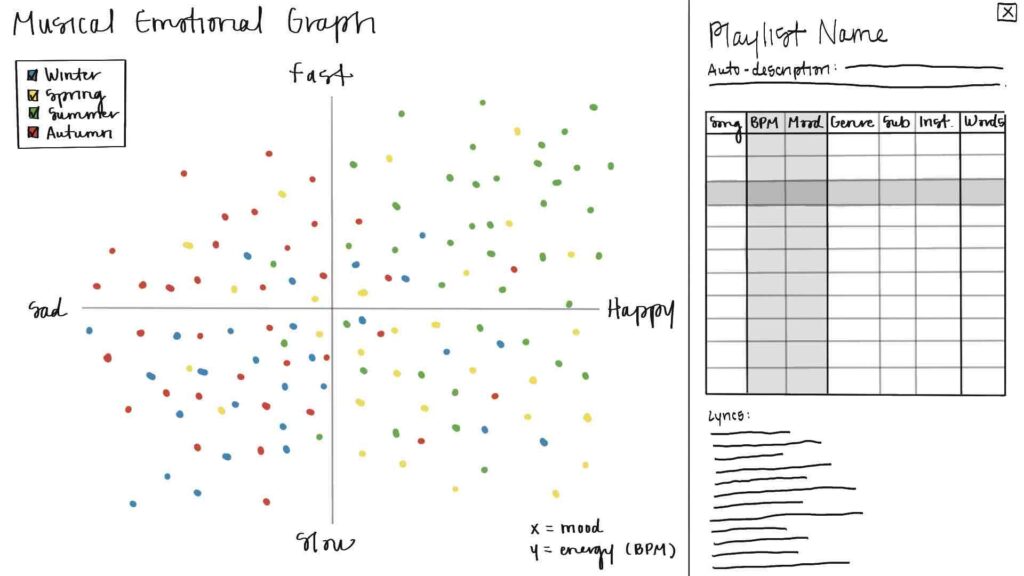

Originally, I wanted these visualizations to be interactive and coded, mimicking a platform on which other playlists could be analyzed, compared, and visualized. This is why my original sketches resemble multi-view dashboards. The left side of the screen would hold a color-coordinated, eye-catching visualization, with options for how many playlists and musical attributes to compare and display at once. Interacting with this side of the screen would update the right side, which would show more “hard” data: an AI-generated description of the playlist, a filterable and sortable table of songs and their musical attributes, and song lyrics.

Once again, due to the time constraints of the class and the limitations of the AI tools, I ultimately designed a series of static images in Figma.

Iterations

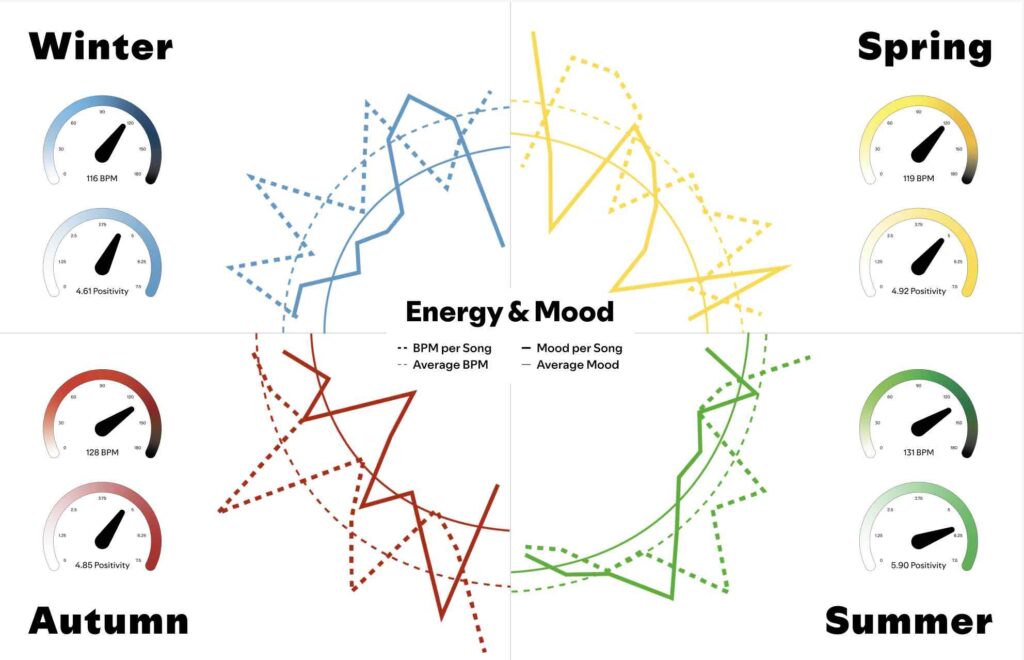

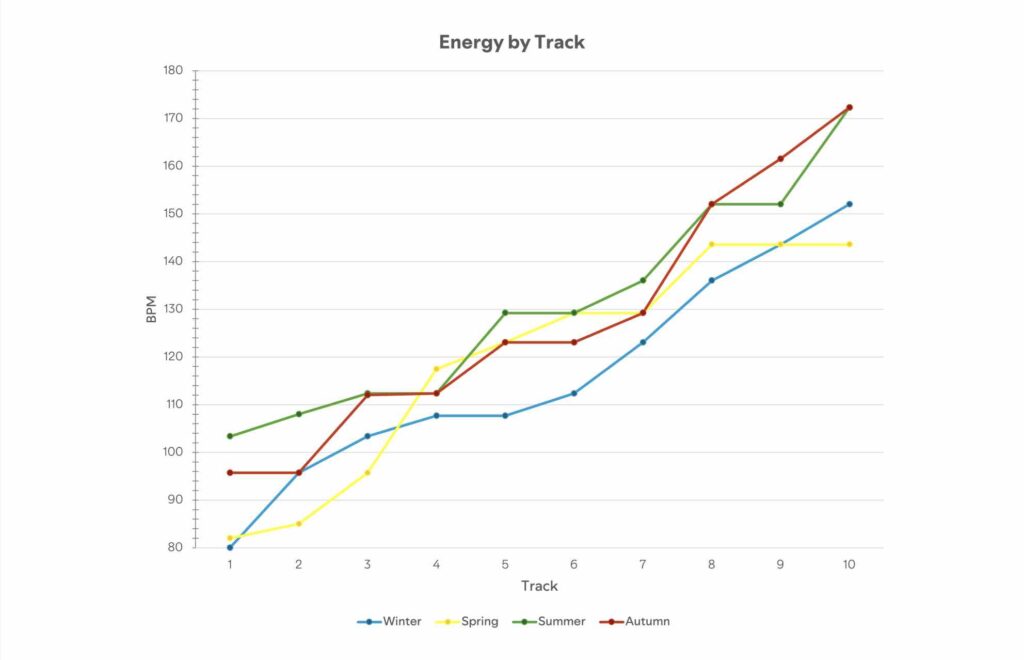

Final Product

The following images are from the Figma file I used for my final presentation.

Insights

Predictions

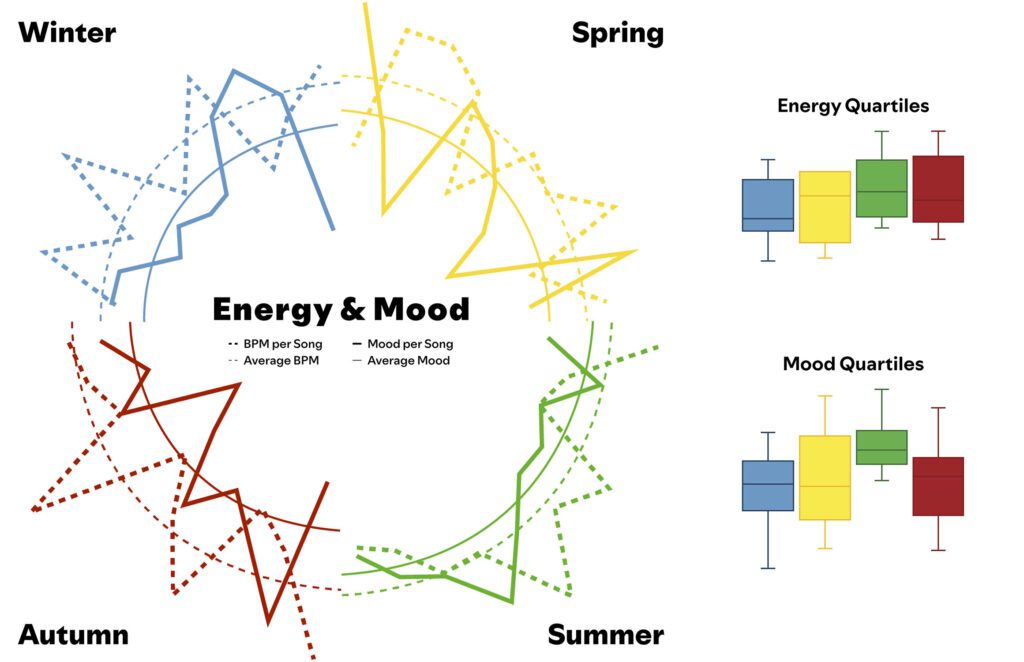

Regarding BPM and mood, I expected the seasons to rank from least to most upbeat/happy in the following order (correlating with temperature): Winter → Fall → Spring → Summer. Regarding sound, I expected the seasons to be distinctive, with some instruments, genres, and subgenres favored in some seasons over others.

Analysis

(I’ve used red, italicized font to indicate results that did not align with my predictions.)

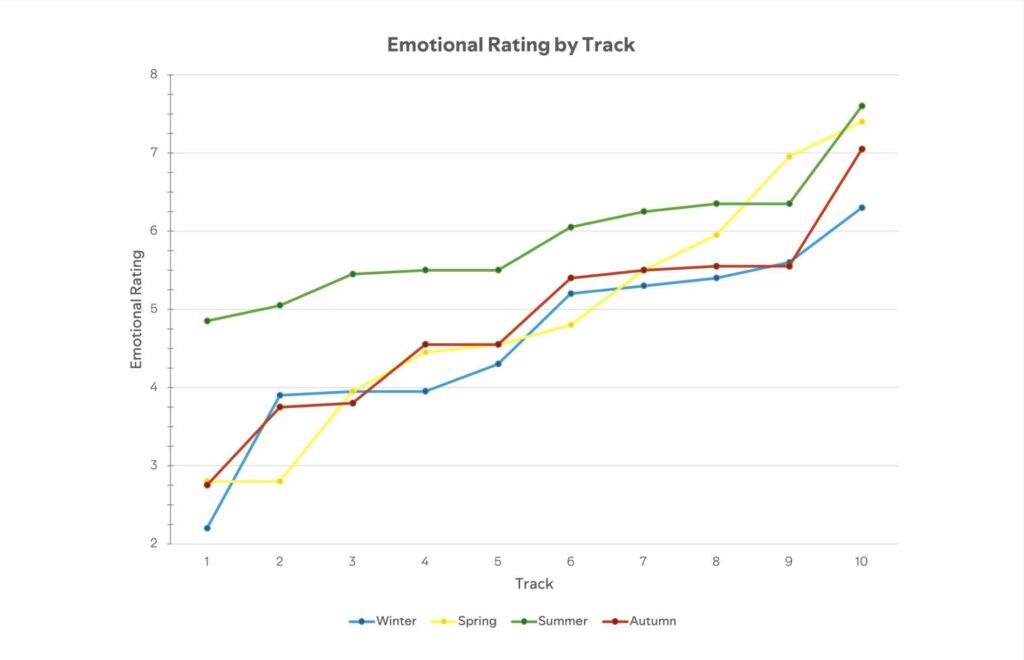

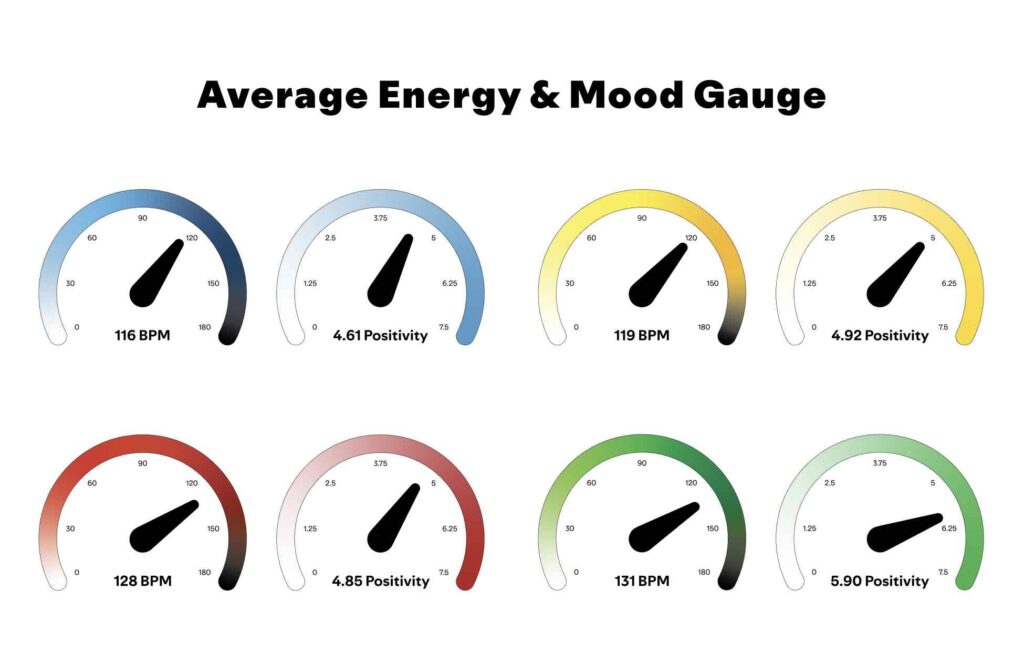

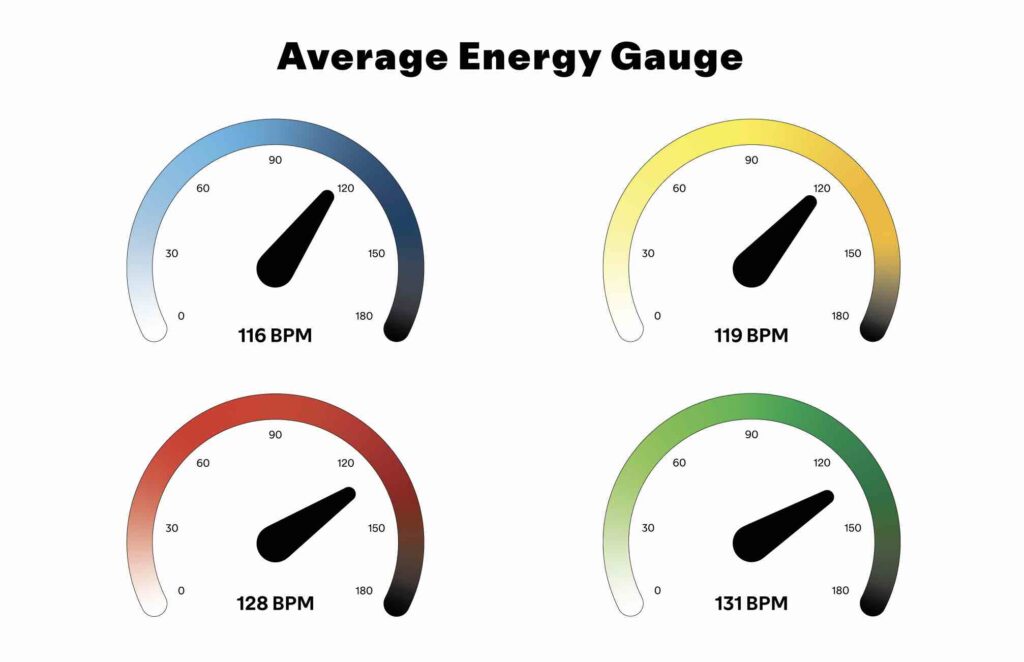

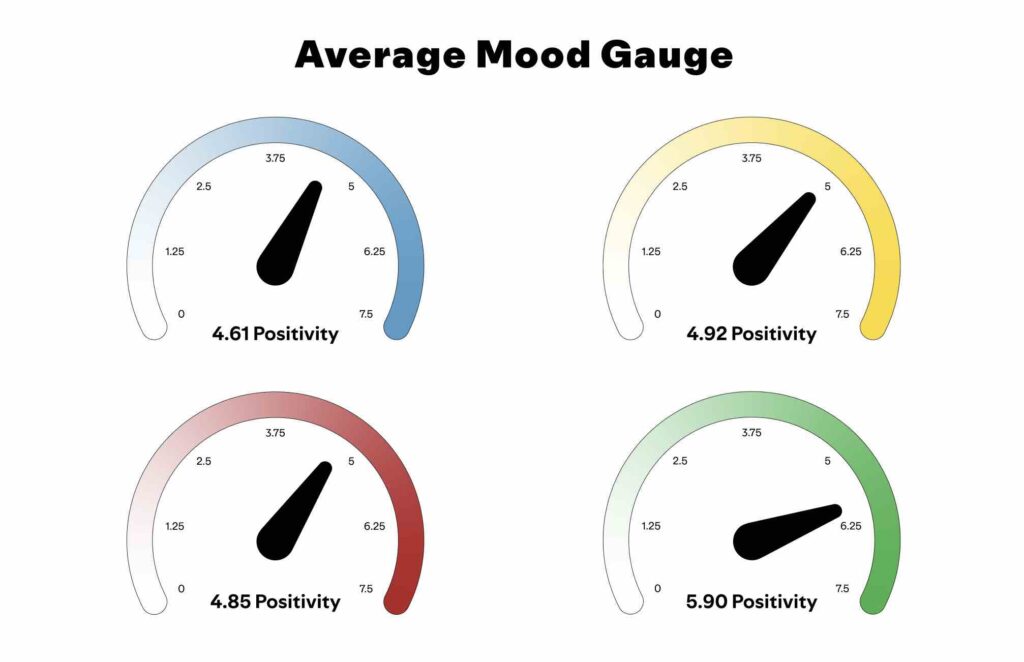

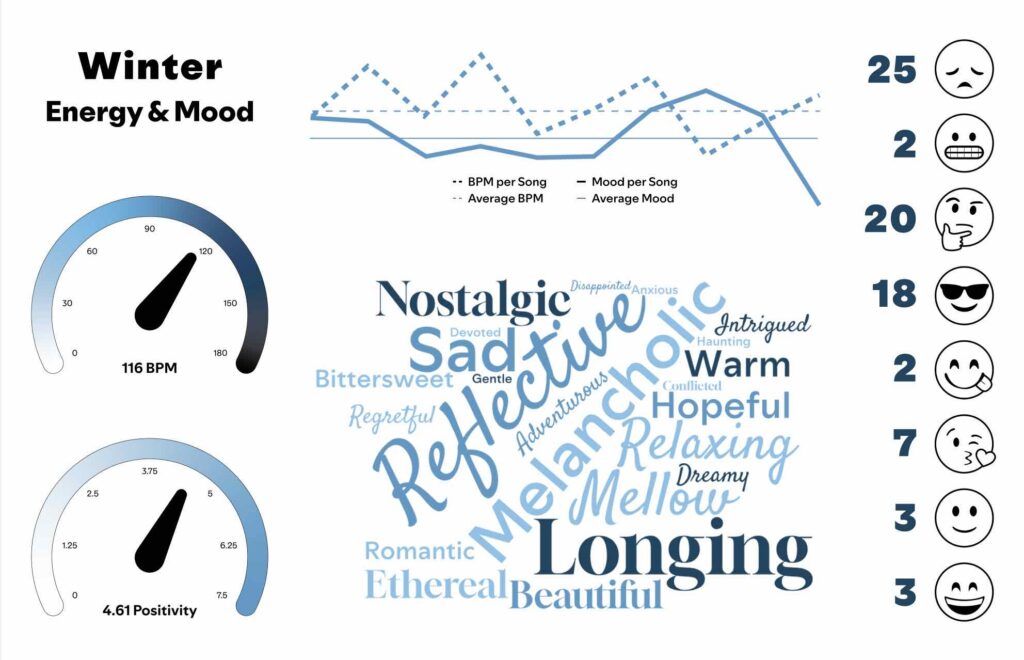

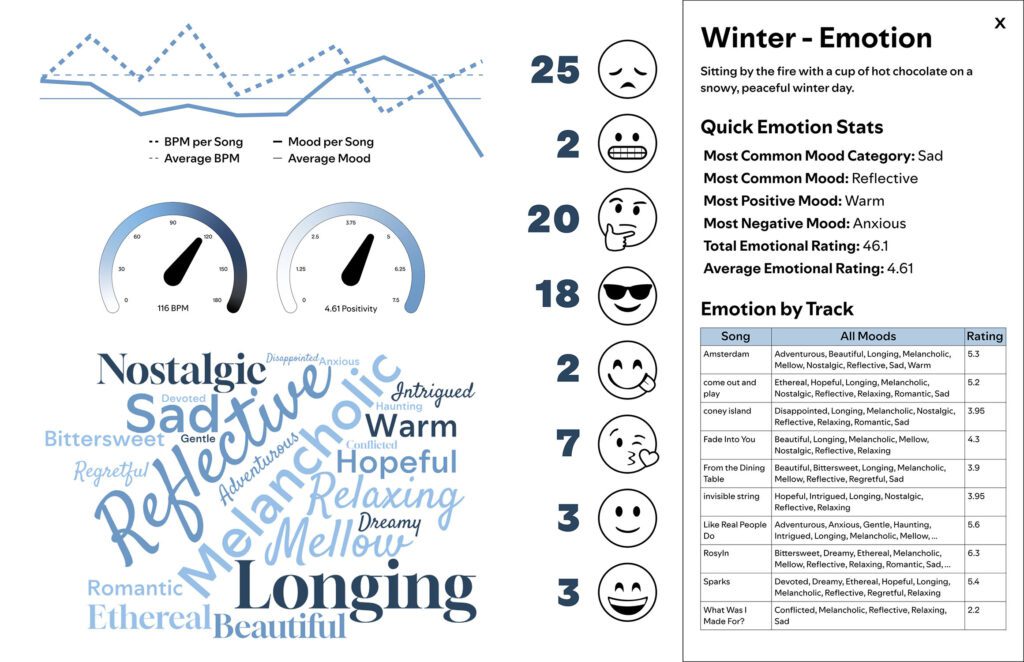

The average BPM per season, in order of lowest to highest, was Winter → Spring → Fall → Summer. Summer had both the highest minimum and the highest maximum BPM of any season, meaning it was the most consistently upbeat. The average mood per season, in order of lowest to highest, was Winter → Fall → Spring → Summer. Summer had both the highest minimum and the highest maximum mood, meaning it was the most consistently happy. Winter had both the lowest minimum and the lowest maximum mood, meaning it was the most consistently unhappy.
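The comparisons above come down to a per-season average, minimum, and maximum, followed by a ranking. Since the project's tools include Google Sheets and Excel, this was presumably done there with functions like AVERAGE, MIN, and MAX; the Python sketch below only illustrates the same arithmetic. The BPM values are hypothetical placeholders, not the playlist data, and the helper names (`summarize`, `order_by_average`, `has_highest_range`) are made up for this example.

```python
from statistics import mean

# Hypothetical placeholder BPMs; the real per-song values are not reproduced here.
bpm_by_season = {
    "Winter": [72, 88, 95],
    "Spring": [90, 104, 110],
    "Fall": [85, 112, 118],
    "Summer": [110, 124, 128],
}


def summarize(values_by_season):
    """Return {season: (average, minimum, maximum)}."""
    return {
        season: (mean(values), min(values), max(values))
        for season, values in values_by_season.items()
    }


def order_by_average(summary):
    """List seasons from lowest to highest average."""
    return sorted(summary, key=lambda season: summary[season][0])


def has_highest_range(summary, season):
    """True if `season` has both the highest minimum and the highest maximum."""
    _, low, high = summary[season]
    return all(low >= other[1] and high >= other[2] for other in summary.values())


summary = summarize(bpm_by_season)
print(" → ".join(order_by_average(summary)))
print(has_highest_range(summary, "Summer"))
```

The same two functions apply unchanged to the mood scores; only the input dictionary differs.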

For the sad mood category, in order of highest to lowest count, it was Winter → Fall → Spring → Summer, meaning Winter was the most consistently sad and Summer the least. For the introspective category, in order of highest to lowest count, it was Winter → Fall / Spring (tied) → Summer. Introspection often arises during negative experiences because we want to understand and resolve discomfort, whereas when we’re happy, we rarely pause to question it. Because introspection tends to accompany unhappiness, these results also support my predictions for happiness.

For the very happy mood category, in order of lowest to highest count, it was Winter → Fall → Spring → Summer. However, for total happiness (the somewhat happy and very happy categories combined), in order of lowest to highest count, it was Winter → Fall / Summer (tied) → Spring.
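These mood rankings rely on counting how many songs fall into each category, adding categories together (somewhat happy plus very happy), and ranking seasons while keeping ties grouped. A minimal sketch, assuming each analyzed song carries exactly one mood label; the labels below are hypothetical placeholders, and `happiness_total` and `ranked_with_ties` are illustrative names.

```python
from collections import Counter
from itertools import groupby

# Hypothetical mood labels per season (placeholders, not the project's data).
moods = {
    "Winter": ["sad", "sad", "introspective", "somewhat happy"],
    "Fall": ["sad", "introspective", "somewhat happy", "very happy"],
    "Spring": ["somewhat happy", "somewhat happy", "very happy", "introspective"],
    "Summer": ["very happy", "very happy", "sad"],
}


def happiness_total(labels):
    """Combine the 'somewhat happy' and 'very happy' counts (missing labels count as 0)."""
    counts = Counter(labels)
    return counts["somewhat happy"] + counts["very happy"]


def ranked_with_ties(scores):
    """Order seasons from lowest to highest score, joining tied seasons with ' / '."""
    ordered = sorted(scores.items(), key=lambda item: item[1])
    groups = groupby(ordered, key=lambda item: item[1])
    return " → ".join(" / ".join(season for season, _ in group) for _, group in groups)


scores = {season: happiness_total(labels) for season, labels in moods.items()}
print(ranked_with_ties(scores))
```

Swapping `happiness_total` for a single-category count (for example, only `"sad"`) gives the other rankings in this section.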

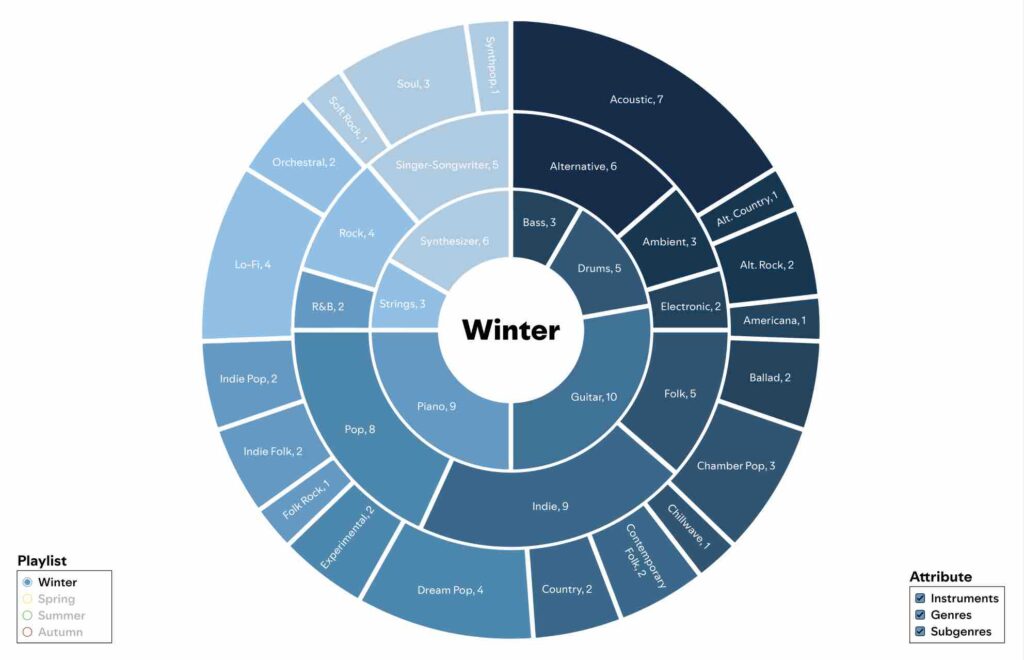

In regard to the sound categories (instruments, genres, and subgenres), Summer was distinct from the other three seasons, but Winter, Spring, and Fall were not necessarily distinct from each other. Of the instruments, drums, bass, and synthesizer were favored in Summer, while piano and strings were favored in the other seasons. Guitar was consistent across all seasons. Of the genres, electronic, hip-hop, and R&B were highly favored in Summer, while ambient, folk, and singer-songwriter were favored in the other seasons (Summer had zero or one instance of each). Indie, pop, and rock were fairly consistent across all seasons. Of the subgenres, dance, rap, and synth-pop were almost exclusively found in Summer, while acoustic was the most common subgenre in all three other seasons. Other subgenres were harder to compare because there were many subgenres and few songs per season.

For the most part, my predictions came true. However, keep in mind that there were only 25 songs per playlist, and due to the limitations of the AI, I analyzed only 10 songs from each. Because of this small sample size, the results were easily swayed by outliers and carried a larger margin of error.
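To see why a small sample widens the margin of error: the standard error of a mean is s / √n, where s is the standard deviation of the values and n is the number of songs. A margin of error is a multiple of this standard error, so it shrinks only with the square root of the sample size. The sketch below assumes a hypothetical standard deviation of 20 BPM, chosen purely for illustration, and compares n = 10 (songs analyzed) with n = 25 (full playlist).

```python
import math


def standard_error(sample_sd: float, n: int) -> float:
    """Standard error of a sample mean: s / sqrt(n)."""
    return sample_sd / math.sqrt(n)


# Hypothetical spread of song BPMs, assumed for illustration only.
ASSUMED_SD_BPM = 20.0

se_10 = standard_error(ASSUMED_SD_BPM, 10)  # songs actually analyzed per playlist
se_25 = standard_error(ASSUMED_SD_BPM, 25)  # full playlist

print(f"n=10: SE ≈ {se_10:.1f} BPM")  # prints n=10: SE ≈ 6.3 BPM
print(f"n=25: SE ≈ {se_25:.1f} BPM")  # prints n=25: SE ≈ 4.0 BPM
```

Whatever the true spread, analyzing all 25 songs instead of 10 would shrink the standard error by a factor of √2.5 ≈ 1.58.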